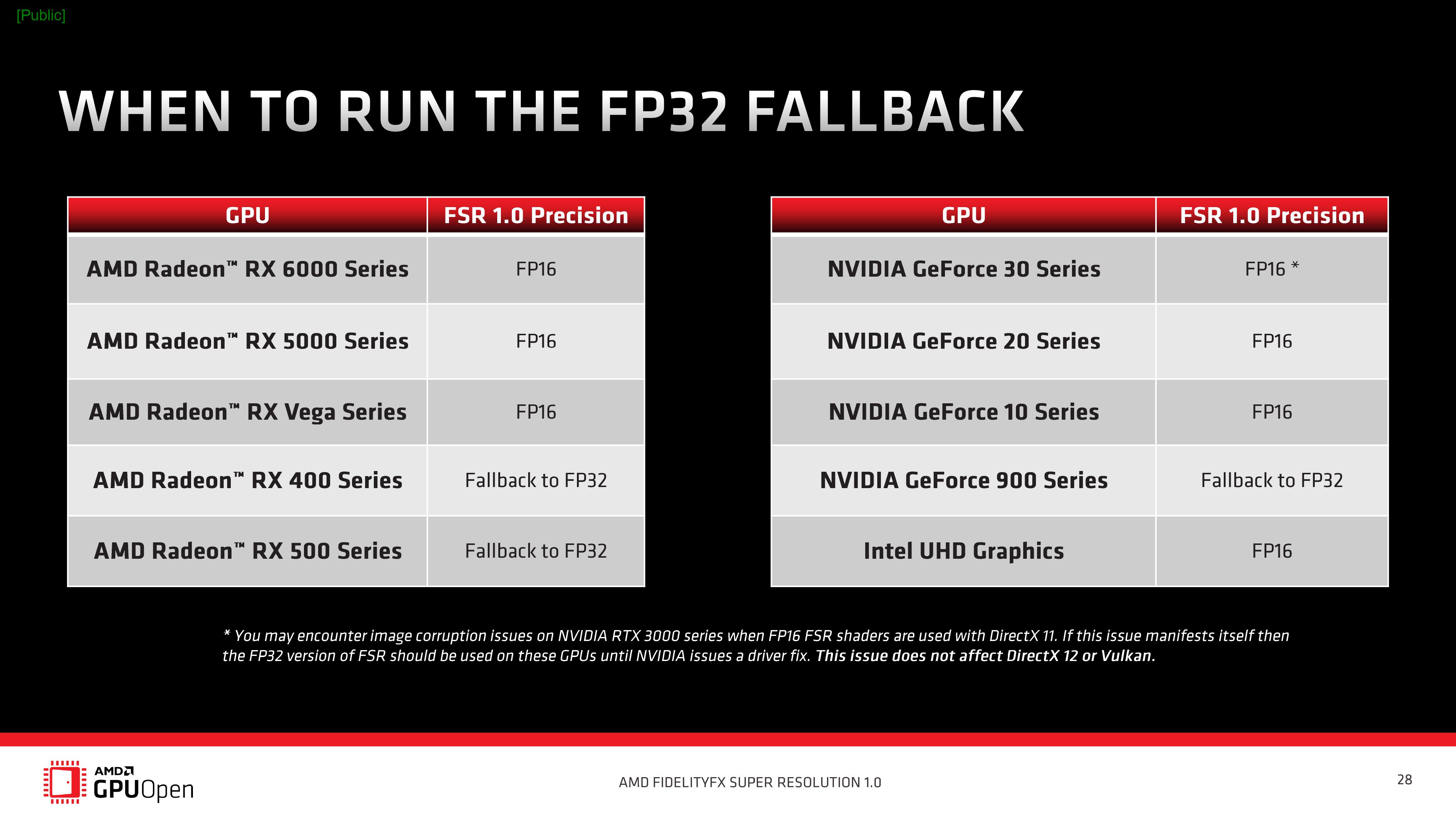

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else) - The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation

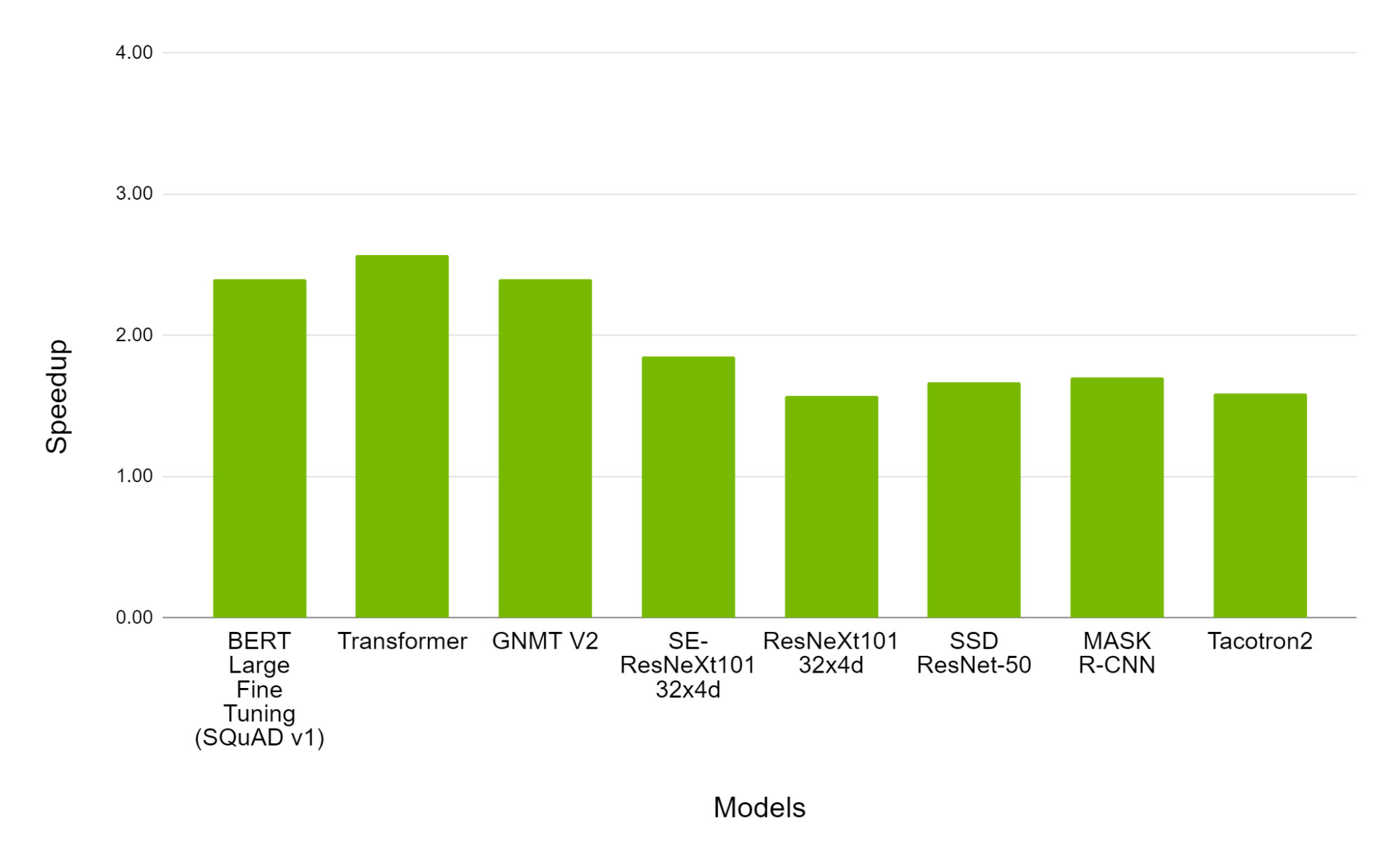

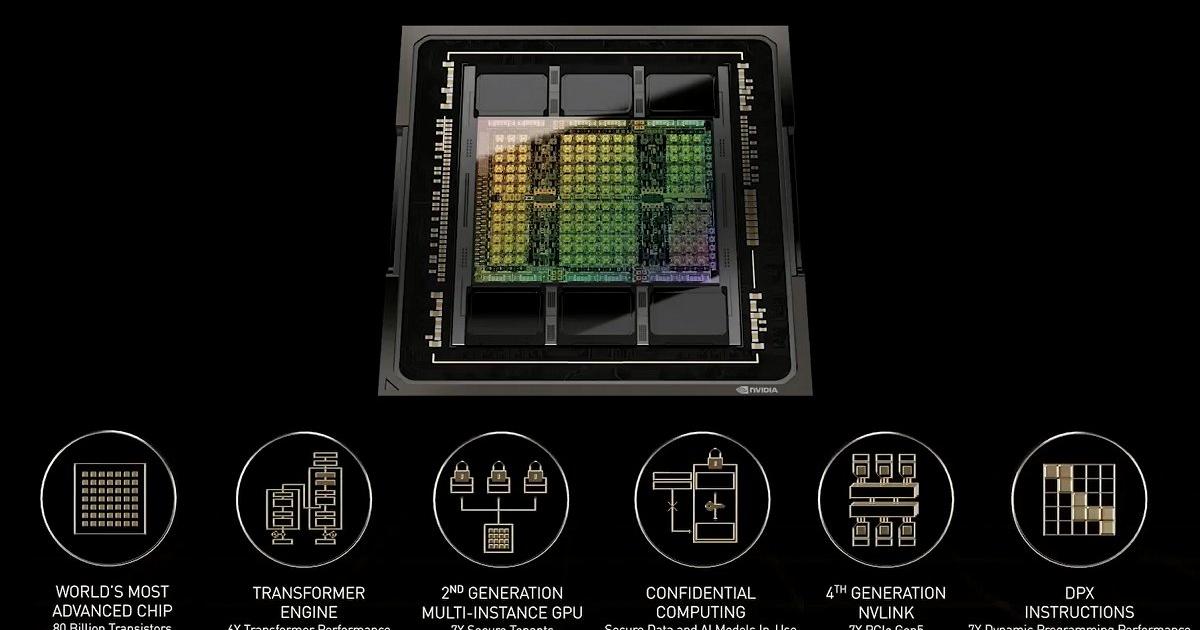

NVIDIA's 80-billion transistor H100 GPU and new Hopper Architecture will drive the world's AI Infrastructure - HardwareZone.com.sg

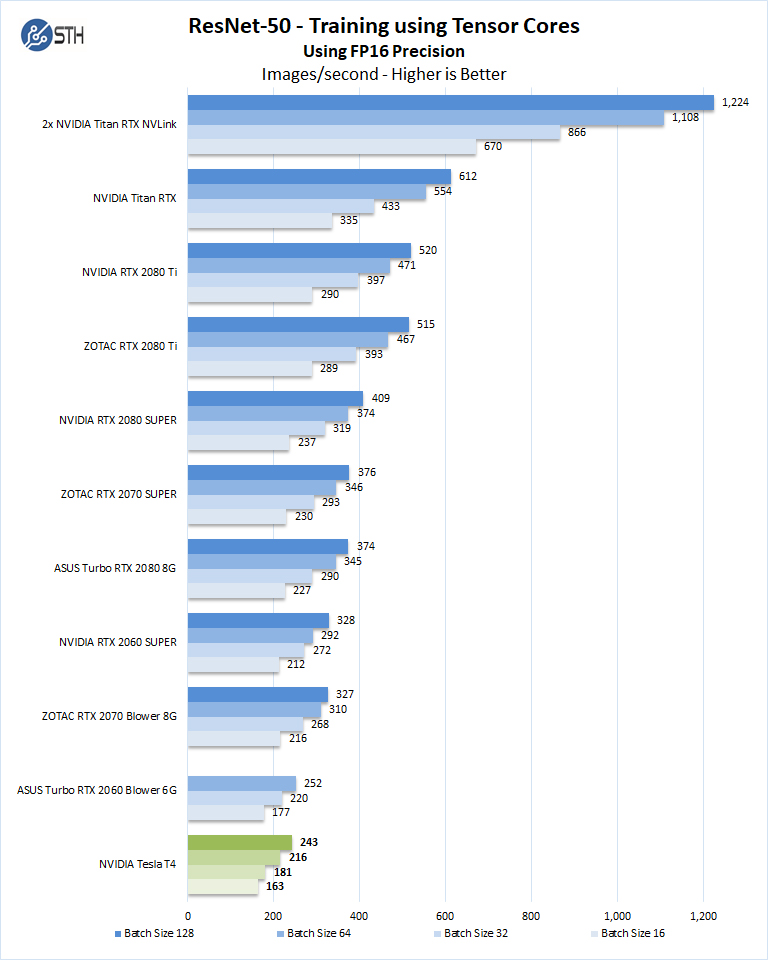

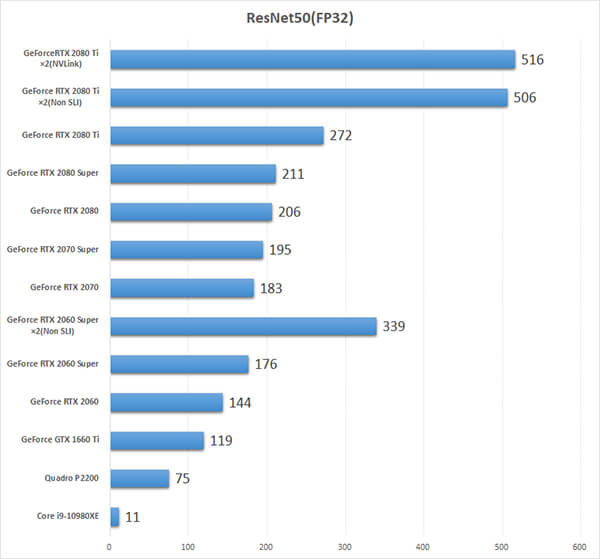

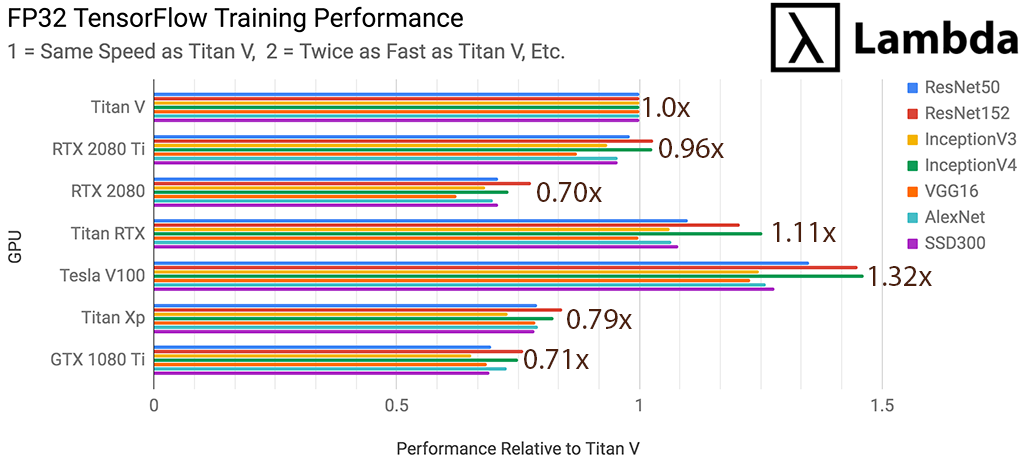

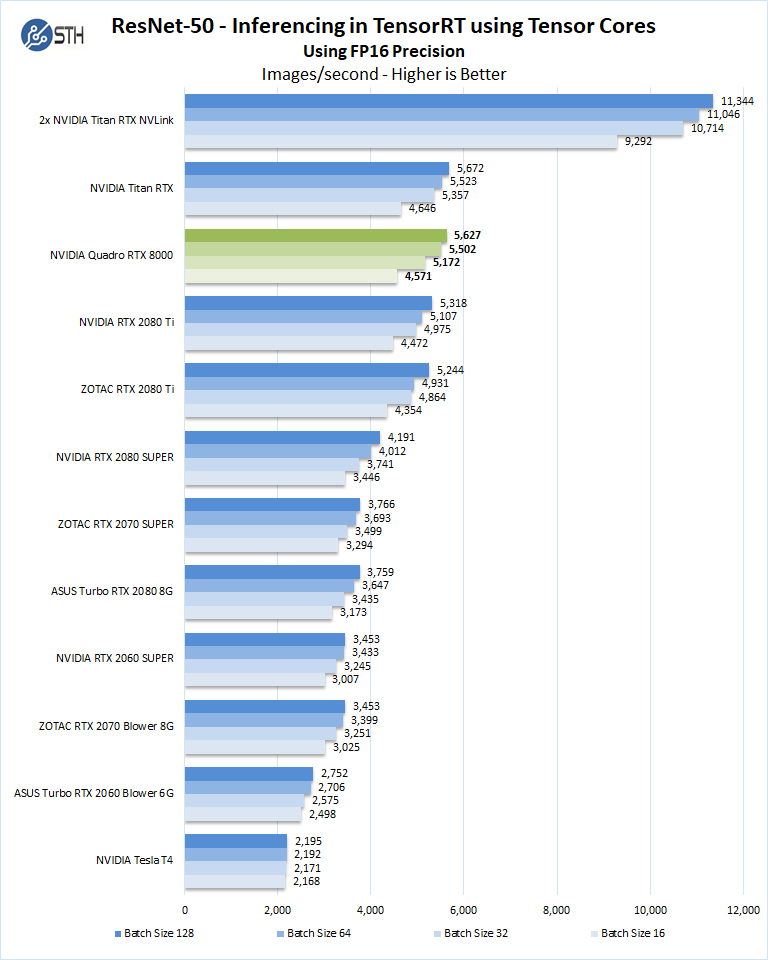

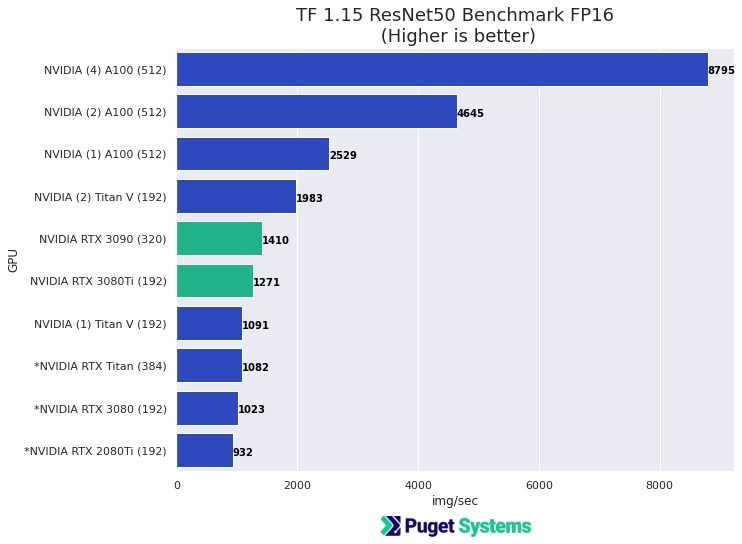

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V | Puget Systems